Note: this article is too long for the email distribution and needs to be read through the Substack app or through a web browser.

Introduction

In previous articles titled “The AI Revolution” and “The History of AI (parts 1 and 2),” we described the sudden surge in AI’s adoption and spread, along with a brief history of the technology through the lens of developments over the last 25 years, focusing mostly on the era of Big Tech and its incremental progress toward today. The goal was to help explain the surprise many experienced at a technology that seemed to come from nowhere and suddenly appear everywhere without warning. However, that is only part of the history: the part where the final breakthroughs have begun to emerge. These recent years are neither the beginning nor the end.

To fully understand the history of AI and the reality of what the technology is (and what it isn’t), we need to journey back to before the invention of the computer, because the computer itself was an attempt to achieve artificial intelligence. It’s that old of a concept. This article presents a brief review of the larger history so we can understand what AI is, and most importantly, what AI isn’t.

Bottom Line Up Front

The concept of Artificial Intelligence was formed out of the 1940s work of D.O. Hebb, who attempted to describe the cognitive functions of the human brain as a neural network and thereby established the science of neuropsychology.

The first computer, ENIAC, built in the 1940s, used the concept of a neural network to calculate artillery firing tables by accounting for different environmental variables. It was the first computational machine to use the input, process, output architecture to replicate the work of man.

Once a machine had been built that could replicate human cognitive functions, using inputs that trigger layers of pattern processing and memory recall to produce outputs, engineers wondered whether a machine could be built to mimic human intelligence itself. That question gave rise to the concept of artificial intelligence.

In 1950 the Turing Test was proposed as a way to assess whether artificial intelligence could be achieved. It held that a computer with the cognitive abilities of a human would be able to hold conversations indistinguishable from those of other humans.

From the 1960s - 2010s the continued development of natural language processing, artificial neural networks, object and pattern recognition, machine learning, and distributed processing led to the eventual development of Large Language Models (LLMs) that we call AI today.

While the technology was being built, science fiction authors explored the possibility, morality, and other implications of the concept. This embedded a mythology in our culture that affects how we perceive AI today.

LLMs are primarily designed to interpret natural language, derive an understanding from it, and generate responses that also mimic natural language.

The knowledge system used by LLMs is based on their ability to interpret language and relate that to other structures of information they have been exposed to and trained to “understand,” based largely on what they have been coded to pay attention to.

Generative AI is the component of LLMs that identifies patterns from text, images, or videos (objects) and can modify or continue the pattern based on variable inputs to produce something new.

The current AI Revolution is largely focused on the transition of knowledge, activity, and art to machines that can mimic the cognitive abilities of man and thus take on his work without him.

AI Revolutionaries see this as an opportunity to transcend the cognitive capabilities of man by building larger scale machines that have higher capacity than any one human individual. They see this as the dawn of a new era of humanity; possibly one that fundamentally changes what it means to be human.

One inherent flaw in AI is the assumption that we understand the cognitive functions of the human brain well enough to re-create them artificially.

Modern neurologists and neuroscientists freely admit that we do not yet understand the human brain, nor how it actually works, while our religious heritage teaches that the essence of humanity is not held within our brain; it is within our soul and is often referred to as coming from our hearts.

AI becomes fundamentally immoral when it is regarded as a peer or a superior to humanity. Indeed it is largely mythology and the rejection of Christianity that has led the West to this stage of technological de-humanization.

A Simplified Definition of Artificial Intelligence

Today’s artificial intelligence should be understood as the eventual outcome formed from the pursuit of a neuroscience question first posed in the 1940s, later fabricated into a machine in the 1950s, and expanded decade by decade thereafter through near continuous advancements in computer technology and deeper studies in neuroscience theories until it was (prematurely) declared as realized in 2022 via OpenAI’s ChatGPT.

In truth, AI is not yet what most of us believe it to be. Technology leaders often admit as much when they tell us that true AI is coming soon, with the latest projection being 2027. We will see. But AI isn’t intelligent, and it isn’t an autonomous being.

The paradigm that defines how most of us regard artificial intelligence has been formed largely through the works of science fiction, philosophy, and religion. We believe the technology is something it isn’t. As a result, we assume it is capable of more than it is and use it for more than we should. But it is also through religion and philosophy that we can understand technology and keep it in its proper place in the overall hierarchy of values, so that we do not misuse what we have built.

Today AI is a blend of those three streams (technological, philosophical, and metaphysical) through which we approach it. It is an undertaking that has fundamentally attempted to re-create the cognitive and neurological functions of man, albeit through the lens of what we can measure and understand of our own neurological systems. It is a technology that should be used as a tool to serve humanity for our good purposes.

The phenomenon and use of this technology today are being encouraged, reinforced, and led by men known primarily as transhumanists. They now propose to use it to lord over us as a superintelligence that exceeds our intellectual capacity in every way and can therefore be a vehicle of transcendence that fundamentally changes the nature and activity of humanity. They quite literally see it as the means to solve every problem they perceive and every limitation inherent in our being.

Indeed, they have proposed that through AI humanity can finally discover the true religion among the many we have wrestled with and the deepest truth among all of our explorations and thoughts. They also promise true transcendence through a newly derived purpose for humanity, one merged with machines. Escaping sickness and death, ending education and work, discarding thinking, divesting creativity, providing for all, establishing total peace and tranquility, optimizing every decision and policy toward what is most efficient and beneficial to the whole: these are the promised fruits of the AI Revolution.

Technologically, AI is an extremely complex and sophisticated interactive machine designed for pattern recognition and pattern continuation. It appears able to carry patterns through to an optimal (desired) conclusion. Its natural-language interface gives the impression of an embodied, intelligent being that communicates like us. So it seems to many that the vision of the tech lords may actually be obtainable.

But today’s AIs are not actually intelligent beings, and when the question was first posed in the 1950s, that was not the goal. Today’s AI bots represent the concept of artificial intelligence, which seeks to mimic human cognitive structures and functions. They focus primarily on language, conditional training, and paying attention to patterns, albeit at a capacity that far exceeds any human individual through distributed computing infrastructure.

It is made up of multiple sub-technologies including:

Natural Language Processing (NLP) by which it can receive normal human linguistic input and derive meaning from it for storage and reference in a processing layer.

Artificial Neural Networks (ANN) that enable it to maintain a complex set of layers and information sources. It can call upon these, based on the input received, to perform pattern recognition and other analytic functions and produce an output.

Deep Machine Learning (ML) that powers the ANN with “self-training” on new inputs and data sets by which pre-coded algorithms parse and summarize data, using pre-coded or derived “attention” pointers.

Generative Adversarial Networks (GANs), which it uses to find patterns and generate a prediction of what is needed to continue or complete a pattern. That prediction is based on the input or on the result of the ANN’s processing layer.

And it has been personified and embodied into a customizable and god-like form that invites you to “ask me anything” as if it has all the knowledge, wisdom, and intelligence needed to provide the answer. So it has been repurposed and is being sold as a precise and trusted advisor, a companion, an optimizer, an organizer, a caretaker…and more.

Artificial Intelligence as it exists today is essentially a magic mirror of humanity as informed by digitized human knowledge and expressed human interactions (largely learned from social media) that have materialized through the Internet. Its magic lies in the fact that it can add emphasis to the images it reflects back to us as we call upon it to show us what we want to see.

Artificial Intelligence is not:

An intelligent being with self-awareness, or with the capacity for morality, care, or interest in you and me.

A person, despite our attempts at personification, that we should regard as a peer, let alone a superior.

Aware of time, or of the need to consider past and future as it processes results in the moment, including the effect its answers will have on the future or on the person receiving its output.

Able to access all information in all the world from all time (it is not all knowing nor all seeing).

Able to exist apart from the intentional and willful effort of man to sustain it.

Trustworthy, accurate, or able to provide infallible results or conclusions, though it will claim otherwise.

“Above” humanity in any way in the truest hierarchy of values as it is itself a mimicry of what we have been able to measure and assemble from our own abilities.

Worthy of having a role of authority over us in any capacity.

This story has been building for nearly 100 years, and it is very important to understand it so we know how we fit into it, what the implications are, and therefore how we should integrate it into our future.

It is also important to remember the forks in this story, each of which has contributed to the modern mythology that makes up the hydra that is AI:

It is part of the developmental progression of technology that started in the 1940s.

It is a topic of science fiction lore that has become part of our cultural psyche.

It is now in the hands of transhumanists who have rejected our moral foundations and who believe that the very essence of the human experience can be transcended.

From here we will dive into a timeline of the significant events that have brought us to the situation in which we find ourselves today.

Origins of Neural Networks

In the 1940s, a psychologist named D.O. Hebb published a book titled “The Organization of Behavior” in an attempt to explain the cognitive functions of our brains. Science Direct summarizes his work: “Hebb’s postulate, or cell assembly theory, forms the foundation for understanding synaptic plasticity, learning, memory formation, and neural network adaptation.” Hebb’s work is said to describe “a general framework for relating behavior to synaptic organization through the development of neural networks.” His cognitive processing theory has also been summarized this way: “Hebb proposed that neural structures that he called ‘cell assemblies’ constituted the material basis of mental concepts.”

This theory laid the foundation of neuropsychology, which proposed that neurons in our brain receive input that is relayed to a network of cells responsible for building and maintaining complex relationships between different patterns, memories, and cognitive processes, and use those to determine an output, or to decide what we do. This cognitive sequence could be basically described as an input operation which triggers a set of neural processes in our cognitive system, finally producing an output. Hebb theorized that our cognitive system was formed by our experiences and environment, was therefore trainable, and also decayed with age as our cellular structures deteriorate.
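In computing, Hebb’s postulate is often reduced to a simple weight-update rule: a connection grows stronger in proportion to how active its input and the receiving neuron are at the same moment (Δw = η·x·y). The Python sketch below is a minimal illustration of that textbook simplification and is not a model of real synapses. The learning rate and input pattern are hypothetical values chosen for the example.

```python
def activation(weights, inputs):
    """The neuron's output: a weighted sum of its inputs."""
    return sum(w * x for w, x in zip(weights, inputs))

def hebbian_update(weights, inputs, output, learning_rate=0.1):
    """Strengthen each connection in proportion to how strongly its input and
    the neuron's output are active together: delta_w = rate * x * y."""
    return [w + learning_rate * x * output for w, x in zip(weights, inputs)]

# Hypothetical example: three input "cells", only the first two fire together.
weights = [0.1, 0.1, 0.1]
pattern = [1.0, 1.0, 0.0]
for _ in range(5):
    y = activation(weights, pattern)
    weights = hebbian_update(weights, pattern, y)

print([round(w, 3) for w in weights])  # → [0.249, 0.249, 0.1]
```

The connections that fired together were strengthened, and the silent one was left unchanged. Because this bare rule only ever strengthens connections, the weights grow without limit (by 20% per step here). Practical variants add normalization or decay to keep them bounded.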

An NIH article cites Hebb’s influence on computer science stating, “Even after 70 years, Hebb’s theory is still relevant because it is a general framework for relating behavior to synaptic organization through the development of neural networks.” And of course neural networks are the foundational architecture of all computer systems from the very first to the most recent.

Over time this concept was expanded to propose that not only do our brains receive input that they match to patterns of recognition and processing to decide an output, but those outputs can become new inputs that continue the cycle until it’s complete or ended.

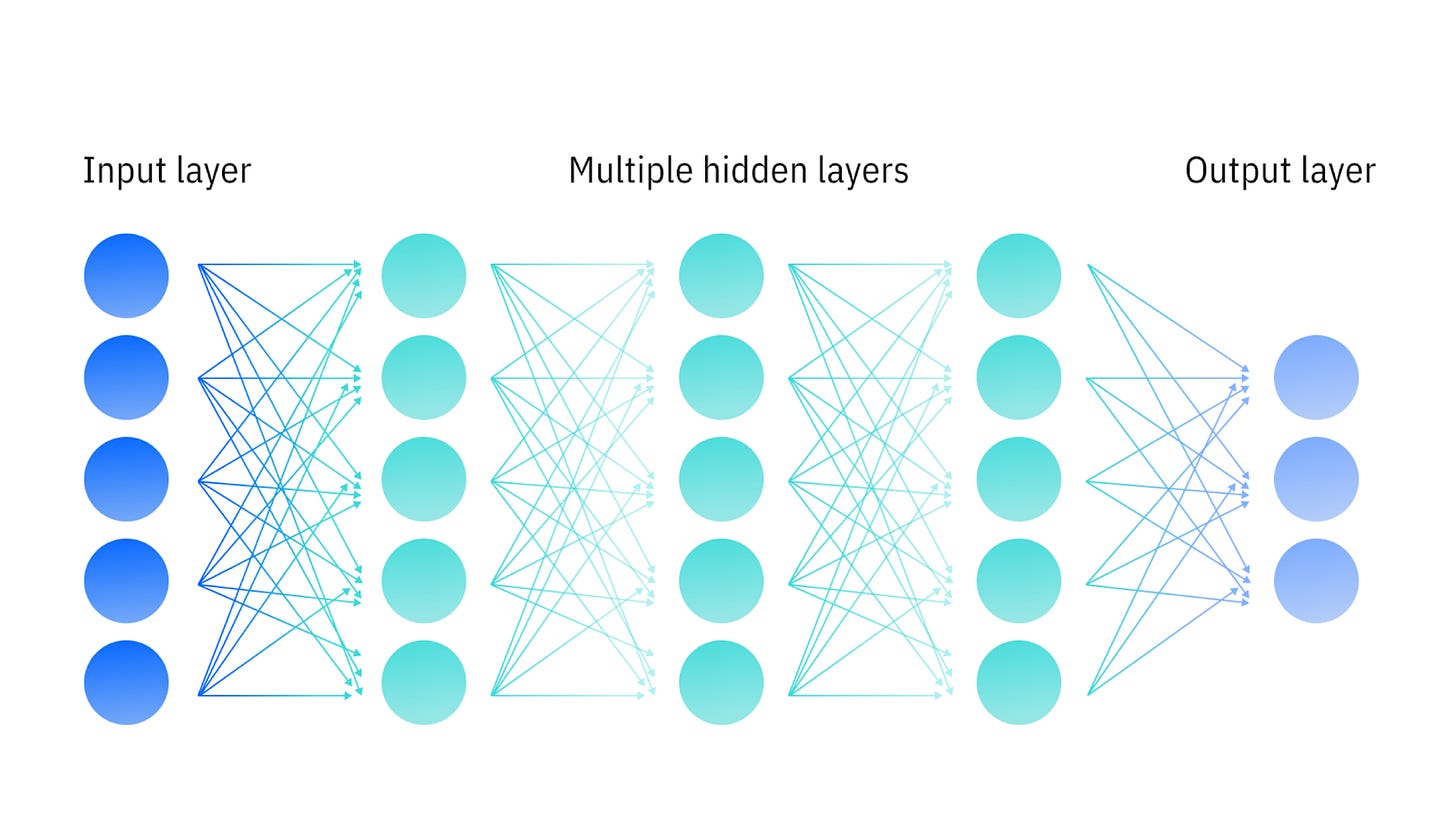

This is the classic architecture for neural networks we now use in data sciences, called “input, process, output.” In it we build a hidden layer of patterns or processes (code) that are activated or leveraged when certain inputs are received, in order to provide the relevant output. In a complex neural network, however, different or multiple inputs can be reviewed and mapped to different or multiple layers of pattern processing, which can produce multiple outputs or consolidate them into one.

IBM describes neural networks by saying, “A neural network is a machine learning model that stacks simple ‘neurons’ in layers and learns pattern-recognizing weights and biases from data to map inputs to outputs.” It is basically an artificial representation of how we think our brains function.
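IBM’s description of simple neurons stacked in layers, with weights and biases mapping inputs to outputs, can be sketched in a few lines of Python. This minimal illustration assumes one hidden layer, a sigmoid activation, and hand-picked hypothetical weights. A real network learns its weights from data and usually has far more neurons.

```python
import math

def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of all inputs plus a bias,
    then applies the sigmoid activation."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def forward(inputs, hidden_w, hidden_b, out_w, out_b):
    hidden = layer(inputs, hidden_w, hidden_b)  # the "process" (hidden) layer
    return layer(hidden, out_w, out_b)          # the output layer

# Hypothetical weights chosen by hand; a trained network would learn these.
hidden_w = [[0.5, -0.4, 0.2], [-0.3, 0.8, 0.1]]  # 2 hidden neurons, 3 inputs each
hidden_b = [0.0, 0.1]
out_w = [[1.2, -0.7]]                            # 1 output neuron, 2 hidden inputs
out_b = [0.05]

output = forward([1.0, 0.5, -1.0], hidden_w, hidden_b, out_w, out_b)
print(output)
```

Every hidden neuron sees every input, and the output neuron sees every hidden neuron. This is the input, process, output flow, with the processing step split into weighted layers.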

When the first computer, ENIAC, was created in 1945, it was built to do exactly that. The machine, commissioned by the US Army, received a series of inputs, considered them via different pre-defined weights and calculations at the processing layer, and produced the ideal output almost instantly and reliably. ENIAC was built using a basic neuroscience-derived architecture to calculate artillery firing tables for WWII and solve this problem:

“Calculating the trajectory of artillery is extraordinarily challenging. Wind speed, humidity, temperature, muzzle velocity, air density, elevation, and even the temperature of the gunpowder must be taken into account to get an accurate reading. Ballistics tables that worked in rainy Germany were useless in Algeria where climate and elevation were so different. To make matters even more difficult, new weapons were constantly being adopted, which required new calculations. The Army desperately needed an expedient way to create reliable ballistics charts.”

The ENIAC used a simple but expanded model consisting of multiple inputs that could trigger multiple processes that were finally joined into a single output when complete. A modern visual representation of our most complex neural network architectures that power AI today looks like this one provided by IBM:

From this graphic, you can see the “hidden layers” which represent the “thinking” part or process part of the overall architecture. The input layer is typically where we interact with the machines, but they can also be coded to retrieve information that acts like an input, triggering one or many hidden processes. The output layer is where we receive the final results of the processing, though this too can trigger inputs to continue to repeat the cycle.

For those who have worked in the Data Science field of technology, this architecture will be very familiar. This is fundamentally how all data analytics platforms function today.

Artificial Intelligence

With the initial creation of a machine that could mimic our understanding of human neurological processes in structure, and could produce the optimal output each time at faster speeds than humanly possible, new questions were posed: “Can we make a machine that is as intelligent as man?” and “Can we create machines that are artificially intelligent?” If so, could advancements in technology perhaps let us create a machine that is cognitively superior to us?

In an attempt to answer these questions, the Turing test was proposed in 1950 as a means to assess whether or not machines could think. In the Turing Test, it is suggested that if a conversation between two humans is indistinguishable from a conversation between a human and a machine, then the machine is mimicking human intelligence so well that it has effectively achieved the equivalence of human intelligence. Thus, if a computer could be built that could carry a conversation as well as a human, as a form of mimicry, then it would be presumed intelligent, since conversation invokes many forms of dynamic thinking in a very complex and unpredictable manner. The machine would need to be capable of all the complexities that make human conversation possible.

Exploring that topic has been the focus of many works of science fiction in written and motion picture formats ever since. No doubt the Star Trek computer of the USS Enterprise comes to mind, or perhaps HAL 9000 from 2001: A Space Odyssey. Science fiction has also long speculated about the possibility of embodying this computer intelligence into robots that take human-like form. In fact, much of the fiction has influenced the science as well as the ethics ahead of it.

The Turing test was limited in nature because the content of the conversation wasn’t measured for accuracy or truth. What mattered was whether the conversation was indistinguishable in nature to a third person listening in. In other words, the test asked whether that listener could tell the human and the machine apart. Factual machines could come later.

This is a very simple attempt to measure intelligence. It does not attempt to define what it means to be human, let alone an intelligent being or a non-human intelligence, as we say today. In fact, we still do not have scientific consensus on what it means to be an intelligent being. We simply have a test that assesses our ability to mimic intelligence via a machine. This is why modern AI Revolutionaries like Sam Altman, Elon Musk, and others debate whether we have actually achieved artificial intelligence yet. Some say yes; some say not yet. In fact, the latest projection is that AI will be realized around 2027.

In assessing whether or not a thing is actually intelligent, you may consider some of the following as clear indicators:

Aware of self: demonstrates interest in itself, its own being, and its own wellness.

Aware of time (memory): makes decisions in the present based on knowledge of the past and consideration of the future: self-preserving, conserving, moral & judicious etc.

Possessing advanced cognition: capable of complex thought and reasoning with a curiosity about the surrounding world and an expressed desire to interact, to learn, adapt, and grow.

Expressive: shows interest in expressing itself as a unique being in its surroundings.

Uses language: understands communication and uses language to communicate.

The American Heritage Dictionary defines intelligence as:

The ability to acquire, understand, and use knowledge: “a person of extraordinary intelligence.”

Information, especially secret information gathered about an actual or potential enemy or adversary.

The gathering of such information.

An agency or organization whose purpose is to gather such information: “an officer from military intelligence.”

An intelligent, incorporeal being, especially an angel.

The act or state of knowing; the exercise of the understanding.

The capacity to know or understand; readiness of comprehension; the intellect, as a gift or an endowment.

A technical course by Zoltan Istenes that describes the principles of artificial intelligence offers an expanded list of attributes including:

Learning: Acquiring and retaining knowledge from experiences.

Reasoning: Applying logic and strategies to solve problems.

Adaptability: Adjusting to new environments and situations.

Memory: Storing and retrieving information when needed.

Creativity: Generating novel ideas and solutions.

Social Intelligence: Understanding and interacting effectively with others.

Self-Awareness: Recognizing one’s own thoughts and emotions.

Regardless, in order to build machines that could begin by carrying on a human-like conversation, a few technical milestones needed to be achieved:

The machine needs to learn how to understand human language in a natural way - to interpret, process, and output in a way that matches a natural conversation. This requires deep knowledge of not only words but also meaning and inflection and other variables we humans naturally perceive.

The machine needs to maintain enough situational awareness, knowledge, and general understanding to carry a conversation.

The machine needs to be able to process new information or input and relate it to a body of general knowledge for evaluation purposes; to “think” independently.

The machine needs to be able to process the conversation quickly enough to match the cadence and pattern of normal conversation.

The machine needs to be able to fill gaps in knowledge, anticipate what is missing, interpret what is left unspoken, and generate the natural flow of a conversation that turns creative or imaginative.

These are effectively the capabilities of modern Artificial Intelligence that were developed over the decades from the 1950s and are still under development today:

Natural Language Processing (NLP)

Artificial Neural Network (ANN)

Deep Learning / Machine Learning (ML)

Distributed Computing (that scales)

Generative Adversarial Network (GAN)

All of these capabilities combined form the modern day Large Language Model (LLM) architecture which is the foundation of every instance of AI today.

Early AI

Initial pursuit of these efforts led to the development of the Perceptron in the late 1950s. It was a more sophisticated machine than ENIAC but was still based on the input, process, output model. It is regarded as the first intentional attempt at building an Artificial Neural Network (ANN). The Perceptron used a single-layer neural network for pattern processing and provided binary outputs (0 or 1, yes or no). The progression of this technology focused on expanding the number and complexity of inputs, layers, and outputs through experiments and technological advancements that emerged steadily over time.
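The perceptron learning rule is simple enough to show in full. The Python sketch below assumes the common textbook form of the rule: predict 0 or 1 from a weighted sum, and nudge the weights by the error whenever the prediction is wrong. The AND function is a hypothetical training task, chosen because a single-layer perceptron can learn it.

```python
def predict(weights, bias, x):
    """Binary output: 1 if the weighted sum crosses the threshold, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

def train_perceptron(samples, epochs=10, lr=1.0):
    """Perceptron rule: adjust weights only when the prediction is wrong."""
    weights, bias = [0.0] * len(samples[0][0]), 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND)
print([predict(w, b, x) for x, _ in AND])  # → [0, 0, 0, 1]
```

A single layer can only separate its two classes with a straight line. That is why a lone perceptron cannot learn a function like XOR. Stacking layers, as later ANNs did, removes that limit.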

Natural Language Processing (NLP) attempted to solve the challenge of building machines that could naturally understand common human language, derive meaning, and produce outputs that match linguistic style and context. NLP matured through experimentation from the 1950s through the 1990s. During that period it was based mostly on pre-defined rules and translators that attempted to relate different languages to one another. These never achieved the desired result until the large-scale, data-driven NLP designs of the early 2000s. Attempts at NLP in the 70s and 80s focused mostly on programming rules to interpret and transform words, as well as on coded lexicons that could be used to form sentences and interpret meaning to facilitate translations and, ultimately, conversations.

Today, NLP is described this way: “At its core, NLP involves several interlinked tasks. These include but are not limited to tokenization (splitting text into words or smaller sub-texts), part-of-speech tagging, named entity recognition (identifying proper nouns such as names of people or places), sentiment analysis (understanding the emotion behind a piece of text), and text generation.”
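Tokenization, the first task in that list, can be illustrated with a deliberately simple word-level splitter. This Python sketch works under that simplifying assumption. Modern LLMs instead use learned subword tokenizers (such as byte-pair encoding) that may split a single word into several pieces.

```python
import re

def tokenize(text):
    """Split text into lowercase word tokens and standalone punctuation marks."""
    return re.findall(r"[a-z0-9']+|[^\sa-z0-9']", text.lower())

print(tokenize("Siri, what's the weather?"))
# → ['siri', ',', "what's", 'the', 'weather', '?']
```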

This is why LLMs and their NLP components apply mathematical computations to text as the basis of understanding data through the relationships discovered between letters, words, sentences, paragraphs etc. This is the foundational pattern processing used to derive an artificial understanding of any source of data so that data can be further evaluated, compared, summarized, and used to address any desired analysis task.
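One simple way to apply mathematical computations to text is to turn each sentence into a vector of word counts and compare the vectors with cosine similarity. The Python sketch below assumes this bag-of-words representation purely for illustration, and the sentences are hypothetical. LLMs use learned numeric embeddings rather than raw counts, but the principle of measuring relationships between pieces of text numerically is the same.

```python
import math
from collections import Counter

def bag_of_words(text):
    """Represent text as counts of each word (a very simple numeric encoding)."""
    return Counter(text.lower().split())

def cosine_similarity(a, b):
    """1.0 means identical word proportions; 0.0 means no words in common."""
    dot = sum(a[word] * b[word] for word in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

s1 = bag_of_words("the user logged in to the website")
s2 = bag_of_words("the user logged out of the website")
s3 = bag_of_words("artillery tables need weather data")
print(round(cosine_similarity(s1, s2), 2), round(cosine_similarity(s1, s3), 2))
# → 0.78 0.0
```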

The expansion and optimization of ANN and NLP capabilities through the early 2000s led to pattern recognition, deep learning and relationship mapping, and outputs that generate new data to carry forward an existing pattern. From this we get today’s Generative AI, which is fundamentally designed to identify patterns and continue them.
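Identifying a pattern and continuing it can be shown at toy scale with a bigram model. Such a model records which word tends to follow which, then extends a prompt one word at a time. This sketch is a hypothetical stand-in, and the corpus is invented for the example. Real LLMs condition on long contexts with neural networks and usually sample among likely next words rather than always taking the single most frequent one.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def continue_pattern(follows, start, length=5):
    """Greedily extend a sequence by always picking the most frequent next word."""
    out = [start]
    for _ in range(length):
        options = follows.get(out[-1])
        if not options:
            break  # no learned pattern to continue
        out.append(options.most_common(1)[0][0])
    return " ".join(out)

corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigrams(corpus)
print(continue_pattern(model, "the", length=4))
```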

Over the decades from the 1950s through today, the development of these disciplines continued with year-by-year experimentation and technological advancement. It all seemed to culminate in a series of final breakthroughs from 2010 to 2017 and in the productization of AI in 2022.

In 1959, an IBM engineer named Arthur Samuel coined the term “machine learning” (ML) through his work on a computer checkers program. One definition of machine learning is the creation of algorithms that a computer can use to derive understanding from whatever data it receives and to perform tasks without being given explicit instructions. In the games Samuel was developing, he was trying to predict possible outcomes based on input variables and then choose the move needed to step toward the desired outcome.

In computer games you experience this as the machine monitors your move or action and forms the next step your computer-based opponent will take to counter your move.
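Samuel’s checkers program combined look-ahead search, which tries possible moves and predicts their outcomes, with a scoring function that it improved through play. The Python sketch below shows only the look-ahead half. It uses a hypothetical toy game in which players alternately take 1 to 3 stones and whoever takes the last stone wins. Because the game is tiny, the program can search every outcome exactly, so nothing here is learned.

```python
from functools import lru_cache

@lru_cache(maxsize=None)
def can_win(stones):
    """True if the player about to move can force a win (taking the last stone wins)."""
    return any(not can_win(stones - take) for take in (1, 2, 3) if take <= stones)

def best_move(stones):
    """Look ahead at each possible move and pick one that leaves the opponent losing."""
    for take in (1, 2, 3):
        if take <= stones and not can_win(stones - take):
            return take
    return 1  # every move loses against perfect play; take one and hope for a mistake

print(best_move(10))  # → 2 (leaves 8 stones, a losing position for the opponent)
```

This is the counter-move behavior described above in miniature: the computer opponent evaluates your position after each of its candidate moves before choosing one.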

In the early 2000s, machine learning proved crucial for automating data input processing and basic analytics functions used by Big Data Analytics platforms like ArcSight and Palantir, which were major contributors to the commercialization of AI and discovery of additional use cases for Artificial Intelligence. These platforms were attempting to derive meaning and understanding from all the data being generated from Internet infrastructure and offered opportunities to discover and address many challenges related to AI including:

Structuring data so it could be efficiently stored and referenced

Normalizing data so it could be compared with other data

Applying analytic functions on data to derive meaningful insights

Building layers of data processing to enrich data with meaning

Summarizing data based on important identifiers

Managing data processing and correlation at massive scale

One of the early challenges machine learning solved was preparing data for evaluation workflows. Humans had previously been required to manually code data models that assigned specific labels, meanings, and structures to data before the analytics engines could use it, whether that data arrived through batch or streaming processes or was recalled from memory. Instead, ML algorithms could automatically recognize the data and transform it into a usable, relatable context for layered processing.

For example, suppose a data set describing login activity to a website needs to be analyzed along with a data set from a different source describing how the user interacted with the web application after login. Both data sets have to be “understood” by the analytics engine: their structure, and which attributes are directly related or even have the same meaning.
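The login-plus-activity example can be made concrete. In the Python sketch below, the two sources, their field names, and the mapping between them are all hypothetical. The mapping is hand-written here; the paragraph’s point is that ML could help infer such correspondences automatically, and this sketch does not attempt that step.

```python
# Hypothetical records from two sources that name the same fields differently.
login_events = [
    {"UserName": "ALICE", "LoginTime": "2024-05-01T09:00"},
    {"UserName": "bob", "LoginTime": "2024-05-01T09:05"},
]
app_events = [
    {"user_id": "alice", "action": "view_report"},
    {"user_id": "Bob", "action": "export_data"},
    {"user_id": "alice", "action": "logout"},
]

# Hand-written mapping from each source's field names to a shared schema.
FIELD_MAP = {"UserName": "user", "user_id": "user", "LoginTime": "login_time"}

def normalize(record):
    """Rename fields to the shared schema and make user names comparable."""
    out = {FIELD_MAP.get(key, key): value for key, value in record.items()}
    out["user"] = out["user"].lower()
    return out

def join_by_user(logins, actions):
    """Attach each user's post-login actions to their login record."""
    sessions = {r["user"]: {**r, "actions": []} for r in map(normalize, logins)}
    for r in map(normalize, actions):
        if r["user"] in sessions:
            sessions[r["user"]]["actions"].append(r["action"])
    return sessions

for user, session in join_by_user(login_events, app_events).items():
    print(user, session["login_time"], session["actions"])
```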

In fact, throughout the early 2000s the big data analytics engines that were trying to make sense of the flood of new data from the Internet were key to maturing machine learning. They also drove advances in microprocessing, data storage, and the underlying data management and compute capabilities that LLMs would need. The needs of big data analytics also produced cloud computing and a new scale of distributed computing that unlocked new speeds and capabilities for AI. The first public cloud was built by Amazon Web Services to serve the internal data analytics needs of the ecommerce website Amazon.com. All of this primarily served the expansion of ANNs, with less progress on the NLP front.

Modern Breakthroughs Toward AI

In 2003 the DARPA-led CALO project re-launched an attempt to create a new wave of computer-powered automation agents called “digital assistants.” These agents joined the NLP and ANN technology of the time to learn, reason, and decide, with a voice-to-text interface and outputs that provided instructions for other technology. They were relatively simple agents, but the project was a profound leap forward for AI development on multiple fronts.

One of the results of this project was an agent that could “listen” in on meetings and automatically schedule follow-up actions or appointments that integrated with the iPhone Calendar app. Another was a speech-enabled NLP assistant known as Siri, which Apple purchased and integrated into the iPhone 4S in 2011. This effectively launched the first wave of digital assistant technology, run by natural language processing, in everyday life. Siri is notable because it is still used today and is likely the first commercialized form of near AI.

Alexa and Gemini followed years later, along with lesser-known speech recognition and processing interfaces used in common technology. None of these came near meeting the criteria of the Turing Test in practical use, since each regularly misunderstands input and often triggers output that is unrelated or was never requested. Still, each continued the story of technological advancement.

In 2004-2005, with the popularity of Facebook and social media, another fork in the AI story began, known as User Behavior Analytics, or UBA. Various components of the emerging AI architecture were leveraged to collect and monitor input from users of the social media platforms and to process and contextualize their interactions. They also delivered predictive analytics that were both the output of UBA and the input for additional automated workflows. These prediction algorithms ingested the inputs from users, generated “learned” user profiles, and related them to other processing algorithms and input streams. This included matching people to other people and giving advertisers information they could use to serve ads custom-matched to individuals based on their interests, activity, attention, and even state of mind. UBA rapidly led to another set of use cases for NLP and ANN: interest predictability and influence operations.

Big Tech learned that by using UBA they could monitor the state of mind of platform users, and in near real time subject them to content or experiences that are most likely to influence a desired behavior. Conversely, they could also decide to withhold or block access to content that could influence their users to behaviors that were not preferable. This triggered a wave of collaboration between the tech world, culture, and politics which was heavily embraced by the Obama Presidential administration.

From 2009 through 2015, several breakthroughs in NLP, ANN, ML, and microprocessing led to the joining of natural language processing with deep neural networks and machine learning, expanding the previous 20+ layers of pattern processing available in pre-existing ANNs to 100 layers of pattern processing and relationship mapping. This led to the finalization of multiple “standards” for AI architectures and, later, the Transformer model architecture that produced the modern Large Language Model, or LLM.

The main purpose of an LLM is to understand human language at tremendous scale, and to generate outputs that are also in normal human language structures. LLMs leverage deep learning and artificial neural networks as the foundation for understanding input, processing it, and producing ideal output. They are basically the scaled-up version of the original computer that can mimic the cognitive processing of the human brain, as we understand it.

The ever-accelerating Internet age and the general accessibility of massive amounts of disparate data and the processing power to make sense of it all pushed us over the final boundaries. With deep learning, dozens and dozens of layers of pattern processing and complex neural networks that could discover meaning and relationships among inputs, and the constant stream of human communications available through social media, it seems the technologists finally had what they needed to name “AI” as an achieved technology.

2012 brought a new wave of machine learning known as deep learning, with unsupervised ANN pre-training that used images to discover higher-level meaning and identify objects, powered by new GPUs and distributed computing. This is where NVIDIA and cloud computing really made their contributions to the AI story. In these experiments, engineers supplied the ANNs with unlabeled images, and the machines had to identify the objects in them as well as derive an understanding of the relationships between the objects they had “learned.” This was a form of automated learning by the machines at a new scale, and it moved AI beyond textual processing and more heavily into image processing that could be combined with text.

The Generative Adversarial Network (GAN), introduced in 2014, offered the ability to learn the patterns in a given data source and to generate new data that follows those patterns. It does this by pairing two networks: a generator that produces new samples and a discriminator that tries to tell generated samples from real ones, each improving by competing against the other. This established a basis for Generative AI (GenAI), which continues patterns by adding or substituting attributes based on user input. When GenAI is joined with an NLP and ANN, a user can prompt the machine with an object (new or recalled from memory) along with inputs that contain the instructions to form a desired pattern, and the machine identifies what needs to be generated to create or continue the pattern and produces that as the final output.

2015’s Highway Net and ResNet architectures for very deep neural networks further expanded ANN depth to 100 or more layers, as opposed to the previous dozens of layers and the original one layer of the first computer. This unlocked yet another expansion of learning and relationship management to a dramatically new scale of complexity and speed.

Finally, in December 2015, OpenAI was founded by Sam Altman, Elon Musk, and others, backed by funding pledges from Peter Thiel, AWS, and others. Its mission was to bring all of this technology together into a product and to produce a set of standards for modern AI.

The First AI Revolutionaries

Until this point, Elon Musk had been primarily focused on technology innovation in the area of green energy and related environmental policy. His flagship businesses included SolarCity and Tesla, both of which invested heavily in solar energy production and energy storage solutions for consumers, with Tesla attempting to break into the emerging “zero emissions” market funded and driven primarily by Californian politicians and green energy subsidies. Since then, Musk has expanded his company profile into the domain of space to realize his dream of making humanity a multi-planetary species, with a primary mission of colonizing Mars. This too is an extension of his environmentalist paradigm, as he views humanity as at risk of extinction here on Earth due to climate change or other man-influenced disasters. Elon Musk champions AI as a means to finally solve all of humanity’s problems by escaping what he considers to be the physical, mental, psychological, and spiritual barriers inherent in our natural being and history. His latest innovations include the adoption of AI into human/machine interfaces, autonomous robots that assist humans, space travel, colonization of other planets, and transcendence of the human consciousness beyond the body.

Peter Thiel had previously been known in the Silicon Valley world through PayPal and later the big data analytics and defense company Palantir. Thiel also co-founded a venture capital fund that backs new tech startups, and he invested heavily in Facebook and SpaceX. In recent years, Thiel has become very outspoken about his enthusiasm for the transhumanist movement and the hope that we can escape the limitations of natural humanity in every way possible. He sees AI as one tool that may help us achieve transcendence to a state of being unlike what we are today.

Like Peter Thiel, Sam Altman attended Stanford University, though he dropped out before graduating, and in 2005 he co-founded a social networking app. Later, Altman chaired a nuclear energy company before becoming CEO of OpenAI. His vision for the technology is closer to using it as a means to solve any and all problems we face today. He envisions AI as a superintelligence that each of us can leverage to our own advantages and ends, living in a state where the machines do everything for us and we are left to explore new things that we cannot even imagine, let alone enumerate, today.

As both Activists and Technologists, all three of these men see technology as a means to change the world around them for everyone. Morally speaking, this is an inversion of our normal value system, which places technology under man and man as the one who influences change upon his world using tech as a tool to his benefit and need.

However, with the inversion of the tool being at the top and over man, these Techno-Activists envision a very near future where sickness and death are overcome, humanity escapes our physical body and home on earth, where work is completely managed by automated machines, and where all of humanity’s beliefs, questions, and biases will have been thoroughly examined and answered or dismissed. To this end, they seek to consolidate all human knowledge, experience, and activity into the AI machines they have built as a means to transform our existence, or as they say, pursue transhumanism through technology-run transcendence.

A Dark Turning

In 2015 - 2016 as OpenAI was being founded, Silicon Valley also experienced a deep meaning crisis and tech leaders ventured into psychedelics and spiritual experiences in an attempt to find themselves and a new meaning or mission for their lives.

After years of rapid innovation and exponential growth of wealth, power, and influence, Big Tech stalled, and its leaders turned their attention and efforts to culture change through politics and technology. They had achieved great wealth and power and wanted to use it to influence the world beyond tech. Much of this energy and effort was put into collaborating with the new cultural and political revolution that began with President Obama and had spread throughout the West. Tech leaders offered their products and capabilities to aid in controlling knowledge and behavior among the people, steering them toward efforts, ideals, and candidates the leaders aligned with ideologically. This is where the symptoms of deplatforming, demonetization, suppression of information, and so on became widespread throughout the technology ecosystem used by consumers.

All of this hit a wall with the results of the 2016 US presidential election. With that outcome, these executives were confronted with several major challenges that represented barriers to their newly found mission and purpose in life:

Innovation in technology had basically stalled from the late 90s into the first decade of the 2000s. Aside from social media, there was not much happening.

Investors were anxious for the next big hit, fueled by a series of big IPOs from the previous years. This created tremendous pressure on tech execs to meet the demand, answer their investors, and beat the competition.

The tech sector became the dominant factor in the stock market and overall economy creating a context where it was “too big to fail” and in great need of a new catalyst.

CEOs began clamoring to be “the next one” who accelerates in their market faster and higher than anyone else.

Attempts at activism to influence culture and politics failed to achieve their goals with the 2016 election of President Trump, which threatened to stall the tech-led cultural revolution and also put Big Tech in a negative standing with much of the population.

In other words, the world as they knew it was coming to an end.

So these executives went in search of new meaning and new opportunities, which once again seem to have culminated in AI. Today, AI is the only thing Big Tech has to offer investors; it has sparked a new global “space race” that is essentially a power grab, and it creates an opportunity to continue the cultural revolution they were forced to abandon in 2016.

But what was notable in this era of soul searching among big tech entrepreneurs including Thiel, Musk, Altman and others, was the depth of drug use and the proliferation of spiritual retreats guided by various spiritual advisors. It is unclear what started this trend, but today, throughout Silicon Valley, there are retreat centers and smaller organizations that will provide you with on-demand experiences that can be a single day in duration or can require travel and extended periods of focused experiences. There are hotel-like institutions that offer private rooms for personal experiences that you can rent on-demand, and retreat centers that you can attend with groups for weekends or longer.

Sam Altman, Peter Thiel, Elon Musk, and, it seems, everyone in the AI world have openly admitted to regularly using psychedelic drugs and seeking spiritual experiences that have guided them, with Peter Thiel going as far as funding efforts to legalize the drugs for wider acceptance and use.

It is important to note this, because it is from these “dark” experiences that these men leading the development of AI have emerged with a renewed sense of purpose and meaning, and a technology that seems to be the vehicle they can use to achieve their new vision.

Peter Thiel, Sam Altman, and Elon Musk have this fundamental ideology at their core: the thing to do is continue with progress at all costs. Stagnation or staying the same is not an option. Asking about the morality of progress is to misunderstand the purpose of progress itself and to keep a hold on stagnation. To progress is the point, and to discover what we will do with our progress is to be realized by progress itself.

The Rise of The ChatBots

Regardless of these dark influences, the establishment of OpenAI spawned a series of rapid breakthroughs in AI over a few short years. Indeed, as the technologists have said, the development of AI accelerates as it continues. Key milestones include the following:

2016 - OpenAI Gym is released and provides reinforcement learning and automated agent training

2017 - The Transformer architecture for ANNs is introduced by Google researchers in a paper titled “Attention Is All You Need,” and is adopted by OpenAI and others, establishing the modern basis for the LLM

2018 - OpenAI language model with 117 million parameters is released

2020 - OpenAI GPT-3 is trained on hundreds of billions of words of Internet text, made available in large part by the rise of social media

2021 - OpenAI releases image generation (output) based on text (input)

2022 - OpenAI releases ChatGPT which is the first “artificial intelligence” that seems to mimic a human conversationally, but also has the capability to provide an answer to seemingly any input.

Following OpenAI, a rapid succession of other organizations and products emerged, attempting to capitalize on the new technology, including:

ChaiAI - 2021

Perplexity AI - 2022

Gemini - 2023

Grok - 2023

Copilot - 2023

Meta AI - 2023

Claude - 2023

The organizations pursuing AI under each of the above names are not only working to finally achieve true AI, but are also seeking to expand beyond it to the next theory: can we create a superior being of an artificial nature that surpasses (or replaces) humanity?

It is in the exploration of this question that many are seeking to spark artificial life from the artificial intelligence that has been established so far, though not quite fully realized.

What They Do

Understanding what these machines are designed to do is critical to understanding what they can actually do, and what they cannot do. This of course informs how they should be integrated into life and for what purposes and outcomes. One of our major challenges with artificial intelligence is how we regard it and the many assumptions we bring to the technology, largely from marketing but also from our science fiction narratives.

The modern AIs, or more properly the LLMs, are essentially natural language processors. They are good at what they are designed to do: read data, relate it, and relay it all in a way that is naturally interactive. To this end, they are good at:

Summarizing common sets of information

Finding patterns among information

Predicting the most likely outcome or what should come next in a sequence

Translating language into machine commands
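To make “predicting what should come next in a sequence” more concrete, here is a deliberately tiny Python sketch. It is a hypothetical toy, not how any production LLM works: the functions `train_bigrams` and `predict_next` and the four-sentence corpus are made up for illustration. It simply counts which word follows which (a “bigram” model) and predicts the most frequent follower. Real LLMs replace these raw counts with a Transformer network over subword tokens and billions of learned weights, but the underlying task, scoring candidates for the next item and choosing a likely one, is similar in spirit.

```python
from collections import Counter, defaultdict

# Hypothetical toy model: counts word-to-word transitions (a bigram model).
# This is an illustration only, not how any real chatbot is implemented.

def train_bigrams(corpus):
    """Count, for every word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())  # .get() does not add new keys to a defaultdict
    if not counts:
        return None  # no pattern was learned for this word
    return counts.most_common(1)[0][0]

corpus = [
    "the cat sat on the mat",
    "the cat chased the dog",
    "the dog sat on the rug",
    "the cat slept",
]
model = train_bigrams(corpus)
print(predict_next(model, "sat"))    # on
print(predict_next(model, "the"))    # cat
print(predict_next(model, "zebra"))  # None
```

The prediction is only as good as the training text: the model has no idea what a cat is, only that “cat” followed “the” more often than anything else.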

Whether or not a particular AI bot can do any of these things well or accurately depends entirely upon its training, what its algorithms teach it to pay attention to, what it has derived from that learning, and the functionality of its subcomponents.

One of the main dangers in all of this is, as we say in the data science world, “junk in, junk out.”

Did We Achieve AI?

Now that we have so many machines using the name artificial intelligence and we live in a year of widespread adoption of the technology into seemingly every aspect of our lives, it’s right to ask the question, did we actually achieve the dream?

Answering that question depends on the test you leverage. According to the original Turing Test, we can probably conclude that we have built artificial intelligence that can converse naturally with humans in a manner that is indistinguishable from human to human conversations.

But our definition of AI today has also been largely formed by works of science fiction and so we expect much more from it. These expectations have been fueled by the AI Revolutionaries who offer this technology to us as embodied beings, as companions, advisors, teachers, and administrators. This adds to the evaluation of AI the question of whether or not these are intelligent beings worthy of being considered as peers or lords as these titles would imply.

If we consider the additional attributes of what makes up an intelligent being, clearly the answer is no, Artificial Intelligence has not yet been realized. Mimicry of human cognitive functionality, perhaps yes, but peer beings of like or superior intelligence? Certainly not.

This latter conclusion is supported by the words of Elon Musk and Sam Altman, who regularly tell the world that AI is nearly here, with the latest estimate being that we will realize it sometime around 2027, now under the name of Artificial General Intelligence or Synthetic Intelligence.

Key markers of intelligence that are not present among the various chat engines include:

They are not self-aware and do not seek self-preservation.

They are not aware of time and do not make decisions based on the awareness of the past or future.

They are not self-expressive and do not demonstrate self-initiated desires.

They are wholly dependent on man to keep them running and growing.

They are a product of human effort based on what we can understand of ourselves.

The AI Revolution and Beyond

As 2025 arrived, the AI Revolution, so named by Silicon Valley, began to transform every sector of the economy and government with the promise that machines can now replace people with equivalent or greater capacity and at lower operating cost to the parent organization. The promise of the AI Revolution is that anything a man does cognitively can be done by an artificial intelligence that mimics his cognitive processes and abilities, and when embodied by a machine or integrated machine-to-machine through automation, it can be done in the physical world on behalf of man. But beyond this, the scale of technology promises that the cognitive and physical capabilities of man can be extended far beyond our natural capacity, scaling as large as we can build them.

There are others weighing in on the Revolution like Larry Ellison who sees the technology as an opportunity to resolve the issues of injustice & crime and to provide cures for all our diseases. Ellison’s vision is to build networks of security cameras and listening devices throughout our communities to monitor everything that is happening all the time so people will be on their best behavior, else be judged on the spot by AI that saw what they did and automatically computed its legality. Ellison promises to cure cancer by using AI to sequence our DNA on an individual basis and use mRNA technology to re-train our system to correct or destroy any cells that do not belong in our body.

And politicians like President Trump see in AI the opportunity for optimizing governance, maximizing efficiency, expanding the military, developing more efficient technology, and overall maintaining an edge over every adversarial force that opposes our political will. And so added to the technological race, we have a military and global governance race. In fact, President Trump’s recently announced Genesis Mission aims to build an AI platform under the management of the US Department of Energy, declaring that, “The Genesis Mission will dramatically accelerate scientific discovery, strengthen national security, secure energy dominance, enhance workforce productivity, and multiply the return on taxpayer investment into research and development, thereby furthering America’s technological dominance and global strategic leadership.”

So what we have now is basically the realization of machines that can do at scale what was originally theorized in the 1940s and 1950s. We can “train” the machines how to “learn” by giving them frameworks and algorithms for pattern recognition, object identification, meaning discernment, and relationship management. We can teach them what to pay attention to and also how to find what to pay attention to as a key component of data learning and relationship management.

By feeding them inputs that trigger input/process/output cycles with a scale of data and analytics capabilities and speed, they can mimic human intelligence, or so it seems. With the ability to find patterns and predict what will come next, they can also seemingly create net-new things from sentences to images to videos, or they can modify existing ones or fill in gaps to correct corrupted ones.

This does not make the machines equivalent to humans, nor does it mean they have equivalent intelligence or capacity for intelligence, unless you believe that all human cognition is simply learned from the environment.

Indeed the inherent limitation of all these machines is their neural networks and the training of the LLMs; what they have been trained on and the algorithms that define how that training works and what relationships and meaning they will discern from them. Essentially the issue is what they pay attention to based on the data sets and algorithms they leverage, and how that is translated and summarized to the user as the complete and total truth, and what we will purpose these machines for.

This is why these machines can and often do provide incorrect or untrue or biased outputs, and also why they seem to “make stuff up” without being asked to. They can learn the incorrect things, add weight to the wrong things, pay attention to the wrong things, or elevate the wrong things in summaries. And when they find gaps, they can attempt to create what they want to summarize through the generative process of continuing observed patterns.
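The “make stuff up” behavior described above can be illustrated with another deliberately tiny Python toy. This is a hypothetical sketch, not how any real chatbot is built: `train_bigrams`, `generate`, the `END` marker, and the corpus are all invented for illustration. The model counts word-to-word transitions in three true sentences, then generates text by always appending the most frequent next word. Because “of germany” appears more often than “of france” in its training text, it continues “paris is the capital of” with the wrong country, producing a fluent sentence that appears nowhere in its training data. Real LLMs condition on far more context and would not fail on this particular example, so treat it only as an illustration of the general mechanism: the output is the statistically likely continuation of observed patterns, not a checked fact.

```python
from collections import Counter, defaultdict

# Hypothetical toy: a bigram text generator used only to illustrate how
# continuing the most common pattern can yield fluent but false output.

END = "<end>"  # marker so the model learns where sentences stop

def train_bigrams(corpus):
    """Count which word follows each word, including the end-of-sentence marker."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split() + [END]
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def generate(follows, start, max_words=20):
    """Greedily append the most frequent next word until END or no known pattern."""
    words = [start.lower()]
    while len(words) < max_words:
        counts = follows.get(words[-1])
        if not counts:
            break  # a gap: nothing was learned about this word
        nxt = counts.most_common(1)[0][0]
        if nxt == END:
            break
        words.append(nxt)
    return " ".join(words)

corpus = [
    "berlin is the capital of germany",
    "munich is a city of germany",
    "paris is the capital of france",
]
model = train_bigrams(corpus)
sentence = generate(model, "paris")
print(sentence)            # paris is the capital of germany
print(sentence in corpus)  # False: fluent, confident, and wrong
```

Nothing in this loop checks whether the sentence is true; it only checks which continuation was weighted most heavily in training, which is why supervision and correction matter.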

This is why correcting the machines and continually supervising them is essential. They have to be trained and that training has to be reinforced in order for it to stick and become the basis for their evaluations, and they must remain as tools wielded by men for the good and right purposes of men, else we invert our world and make ourselves subjects to our own broken image.

Early Dangers

Already with the AI Revolution, we have begun the process of divesting and delegating knowledge and truth and understanding to these machines rather than seeking and maintaining subject matter expertise within ourselves or seeking the direct wisdom and knowledge of real human experts. We are in essence divesting ourselves into the machines in a sort of upside down reality as if we serve them. Indeed Elon Musk has indicated that is our very purpose as the “bootloaders for AI.”

Another inherent danger of these machines is the assumptions we bring to them from our own mythology about them, which has largely been crafted by books and movies for decades. People today already assume these LLMs are intelligent beings who are all-knowing, all-seeing, all-wise, perfectly accurate, and beyond critique. This is why you often see people on social media invoking an AI agent to answer whether a post or story is true, to discern the meaning of stories, posts, and articles on their behalf, to teach them skills, or even to perform those skills on their behalf without supervision.

It is the hope of the AI Revolutionaries that we can take this experiment to the next level with modern compute capacity, the digitization of data, and an increasing scale of inputs, so that these machines can “reason” beyond our capacity and consider what we cannot, and therefore do what has been impossible until this point. They believe that if they can build the perfect algorithms to guide the learning process of the machines, then they can break away from all of our cognitive limitations, beliefs, and biases, and finally get to the raw and full truth and optimal outcomes. They talk of things that will emerge from this new technology that will guide humanity into a new way of being. Indeed, as demonstrated by Peter Thiel’s hesitation in answering the question posed by Ross Douthat during an interview for Interesting Times, it’s not clear whether or not these AI Revolutionaries even want humanity to continue. Elon Musk has hinted at his preference, as he has modified his vision of space travel from “humans will be an interplanetary species” to now “human consciousness will be interplanetary.”

Indeed, the head of Google’s AI project is encouraging high school students to give up on their dreams of careers and to abandon higher education altogether, because as he sees the near future, in 4 years or less there won’t be any jobs to be had, except caring for the AI robots.

So now our problem is, what we will do with these machines, what mythology we will bring to them, how much we will let them do on our behalf, and what will be the net impact that will have on all of us. It seems right now we are on a path of destroying the essence of humanity and building for us a new god that will work to replace us.

Looking back to the visionaries of the AI Revolution, the outcome doesn’t look good for humanity as it seems that none of these men are too keen on the continuation of humanity in our natural state and as Peter Thiel has put it, “we want to transform the totality of who you are” and as Elon Musk puts it, “humanity is the bootloader for AI,” and “probably none of us will have jobs.”

So what will the meaning of life be if we overcome death, build machines that take care of all our needs, and after we have outsourced all creative, challenging, and expressive works to agents and bots? The Revolutionaries don’t exactly know, but they suspect we will have to figure it out when we get there. Until then, they promise us peace, provision, protection, prosperity, and entertainment.

A Controlled or Counter Revolution

Some have proposed that in the face of this dark turning, we can control the outcome and purpose this technology for good. Indeed, the analytical powers of LLMs are very real and can be very useful in solving problems. These voices seek to control the AI Revolution and steer it toward producing outcomes for the good of humanity. They call on political and religious leaders to put their weight and resources behind capturing the technology and wresting it from the hands of the current AI Revolutionaries, or perhaps converting them to Christianity.

But the question is, has the phenomenon behind the technology taken on a life of its own to the extent that it may be too late for any of that?

Can we put the genie back in the bottle? Can we do as JRR Tolkien wrote and wield this one ring to rule them all but for the good of the many? Or has this technology been so repurposed and so wrapped in mythology that there is only one option ahead for us?

To answer all of this, we need to separate fact from fiction, see the machine through the mythology, and choose for ourselves what we will allow to lord over our lives.

In my opinion, we’re in serious peril. Whether or not the Revolutionaries achieve their goals, and regardless of whether the technology actually works, we seem to have given ourselves to this god and have already chosen for it to lord over us.

Indeed, there is almost no opposition to AI. I think the best we can do is build parallel streams of life focused on all the things that give life meaning and humanity its core identity, and seed our story into future generations with the hope that something of the truth will persist.

Review of Key Dates:

1940s - D.O. Hebb founds neuropsychology and writes “The Organization of Behavior” to explain how our brains decide to act.

1945 - ENIAC - first computer

1950 - Turing test; “can we make machines intelligent?” Measure machine intelligence by exact mimicry of natural language processing that happens in conversations.

1958 - Perceptron - an early ANN that mapped inputs to an output by comparing a weighted sum of the inputs against a threshold.

1950s - 1990s - NLP natural language processing research and “golden age of AI theory”

1980s - machine learning advances NLP beyond the previous “rule based” translations

2000 - ArcSight, founded as Wahoo Technologies - start of big data analytics

2000s - Internet boom generates massive amounts of data for test & development

2003 - 2008 DARPA CALO - digital assistant, task automation - learn, reason, decide plus speech interface.

2003 - Palantir - analytics as a service

2004 - 2005 - Facebook and UBA

2006 - AWS and cloud computing

2008 - Great Recession; big data analytics platform IPO

2009 - 2012 Advances in Neural Network based pattern and image recognition (relationship building and associations)

2010 - NLPs and Deep Artificial Neural Network joined

2011 - Siri in iOS

2012 - Unsupervised pre-training powered by new GPUs

2014 - Generative Adversarial Network (GAN) - generate new data that continues a pattern from the data source

2015 - Highway Net, ResNet produce very deep neural networks - 100+ layers

2015 - OpenAI founded by Altman, Musk, and others, with backing from Thiel, AWS, and others

2016 - OpenAI Gym - reinforcement learning and agent training

2017 - Transformer architecture for ANN (used by OpenAI and others)

2018 - OpenAI language model with 117 million parameters

2020 - OpenAI GPT-3 trained on hundreds of billions of words of Internet text

2021 - OpenAI image generation from text

ChaiAI - 2021

OpenAI - 2022

Perplexity AI - 2022

Gemini - 2023

Grok - 2023

Copilot - 2023

Meta AI - 2023

Claude - 2023

2022 - OpenAI ChatGPT

2025 - AI Revolution & Transformation

Some additional references

https://www.sri.com/hoi/artificial-intelligence-calo/

https://commtelnetworks.com/exploring-the-impact-of-natural-language-processing-on-cni-operations/

https://medium.com/@DiscoverLevine/a-timeline-of-openais-technology-funding-and-history-c91cbc071a85